Robot taught to smile and frown through self-guided learning

Washington, July 9 (ANI): Scientists at the University of California, San Diego, have revealed that a hyper-realistic Einstein robot has learnt to smile and make facial expressions through a process of self-guided learning.

The researchers say that they used machine learning to "empower" their robot to learn to make realistic facial expressions.

"As far as we know, no other research group has used machine learning to teach a robot to make realistic facial expressions," said Tingfan Wu, the computer science Ph.D. student from the UC San Diego Jacobs School of Engineering who presented this advance on June 6 at the IEEE International Conference on Development and Learning.

The researchers have also uploaded a video to YouTube showing the Einstein robot head performing asymmetric random facial movements as part of the expression learning process.

The faces of robots are increasingly realistic, and the number of artificial muscles that control them is rising.

In light of this trend, researchers from the Machine Perception Laboratory are studying the face and head of their robotic Einstein, hoping that their work may help them find ways to automate the process of teaching robots to make lifelike facial expressions.

According to them, the Einstein robot they worked on has about 30 facial muscles, each moved by a tiny servo motor connected to the muscle by a string.

The researchers point out that developmental psychologists speculate that infants learn to control their bodies through systematic exploratory movements, including babbling to learn to speak.

Initially, these movements appear to be executed in a random manner as infants learn to control their bodies and reach for objects.

"We applied this same idea to the problem of a robot learning to make realistic facial expressions," said Javier Movellan, the senior author on the paper presented at ICDL 2009 and the director of UCSD's Machine Perception Laboratory, housed in Calit2, the California Institute for Telecommunications and Information Technology.

Although the results are promising, the researchers admit that some of the learned facial expressions are still awkward. One potential explanation is that their model may be too simple to describe the coupled interactions between facial muscles and skin.

To begin the learning process, the UC San Diego researchers directed the Einstein robot head to twist and turn its face in all directions, a process called "body babbling".

During that period, the robot could see itself in a mirror and analyse its own expression using facial expression detection software created at UC San Diego called CERT (Computer Expression Recognition Toolbox).

That provided the data necessary for machine learning algorithms to learn a mapping between facial expressions and the movements of the muscle motors.
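The babbling-and-learning loop described above can be illustrated with a small simulation. This is only a sketch under stated assumptions, not the researchers' implementation: the face and the expression detector are replaced by a made-up linear function (`observe_expression`), the feature count and number of trials are invented, and ordinary least squares stands in for whatever learning algorithm the team actually used.

```python
import numpy as np

rng = np.random.default_rng(0)

N_SERVOS = 30      # the article reports about 30 facial servos
N_FEATURES = 12    # hypothetical number of expression scores per observation
N_SAMPLES = 500    # hypothetical number of babbling trials

# Hypothetical stand-in for the physical face plus the expression detector.
# A real system would read scores from software such as CERT instead.
true_map = rng.normal(size=(N_SERVOS, N_FEATURES))

def observe_expression(servo_cmd):
    """Return simulated, slightly noisy expression scores for a servo command."""
    noise = 0.01 * rng.normal(size=N_FEATURES)
    return servo_cmd @ true_map + noise

# "Body babbling": issue random servo commands and record what the face shows.
commands = rng.uniform(0.0, 1.0, size=(N_SAMPLES, N_SERVOS))
expressions = np.array([observe_expression(c) for c in commands])

# Fit a linear model (with a bias column) from servo commands to expressions.
design = np.hstack([commands, np.ones((N_SAMPLES, 1))])
weights, *_ = np.linalg.lstsq(design, expressions, rcond=None)

def predict_expression(servo_cmd):
    """Predict expression scores for a servo command using the fitted model."""
    return np.append(servo_cmd, 1.0) @ weights

# Check the fitted model on a command that was not part of the babbling data.
test_cmd = rng.uniform(0.0, 1.0, size=N_SERVOS)
error = np.abs(predict_expression(test_cmd) - test_cmd @ true_map).max()
```

In this toy setting the fitted model recovers the simulated face closely because the simulation is itself linear. A real face, with skin coupling neighbouring muscles, would not necessarily be captured this well.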

After the robot had learnt the relationship between facial expressions and the muscle movements required to make them, the researchers had it produce facial expressions it had not encountered before.
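Once a mapping from servo commands to expressions is available, producing a requested expression amounts to running that mapping in reverse. The sketch below is an illustration only, not the authors' method: it assumes a linear model (`servo_to_expr`, filled here with random numbers), a hypothetical helper `solve_commands`, and a least-squares solve that ignores the physical range limits of real servos.

```python
import numpy as np

rng = np.random.default_rng(1)

N_SERVOS, N_FEATURES = 30, 12   # feature count is a hypothetical choice

# Hypothetical learned model: row i holds the effect of servo i on each score.
servo_to_expr = rng.normal(size=(N_SERVOS, N_FEATURES))

def solve_commands(target, available):
    """Least-squares servo commands for `target`, using only `available` servos."""
    cmd = np.zeros(N_SERVOS)
    sol, *_ = np.linalg.lstsq(servo_to_expr[available].T, target, rcond=None)
    cmd[available] = sol
    return cmd

# A target expression that was not part of any training data.
target = rng.normal(size=N_FEATURES)

all_servos = np.arange(N_SERVOS)
full_cmd = solve_commands(target, all_servos)

# The same solve restricted to a subset, e.g. when one servo is unavailable.
unavailable = 7                               # arbitrary index for illustration
working = np.delete(all_servos, unavailable)
reduced_cmd = solve_commands(target, working)

# How far the restricted solution lands from the requested expression.
residual = np.linalg.norm(reduced_cmd @ servo_to_expr - target)
```

Because this toy model has more servos than expression scores, the remaining servos can still reach the target when one is excluded, and their commands shift to make up the difference.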

For example, the robot learned eyebrow narrowing, which requires the inner eyebrows to move together and the upper eyelids to close a bit to narrow the eye aperture.

"During the experiment, one of the servos burned out due to misconfiguration. We therefore ran the experiment without that servo. We discovered that the model learned to automatically compensate for the missing servo by activating a combination of nearby servos," the authors wrote in the paper presented at the 2009 IEEE International Conference on Development and Learning.

"Currently, we are working on a more accurate facial expression generation model as well as [a] systematic way to explore the model space efficiently," said Wu.

Wu concedes that his team's "body babbling" approach may not be the most efficient way to explore the model of the face.

While the primary goal of this work was to solve the engineering problem of how to approximate the appearance of human facial muscle movements with motors, the researchers say this kind of work could also lead to insights into how humans learn and develop facial expressions. (ANI)

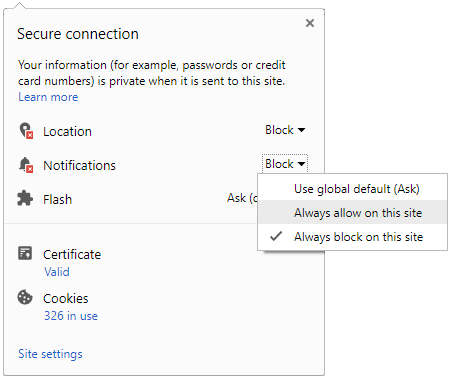
